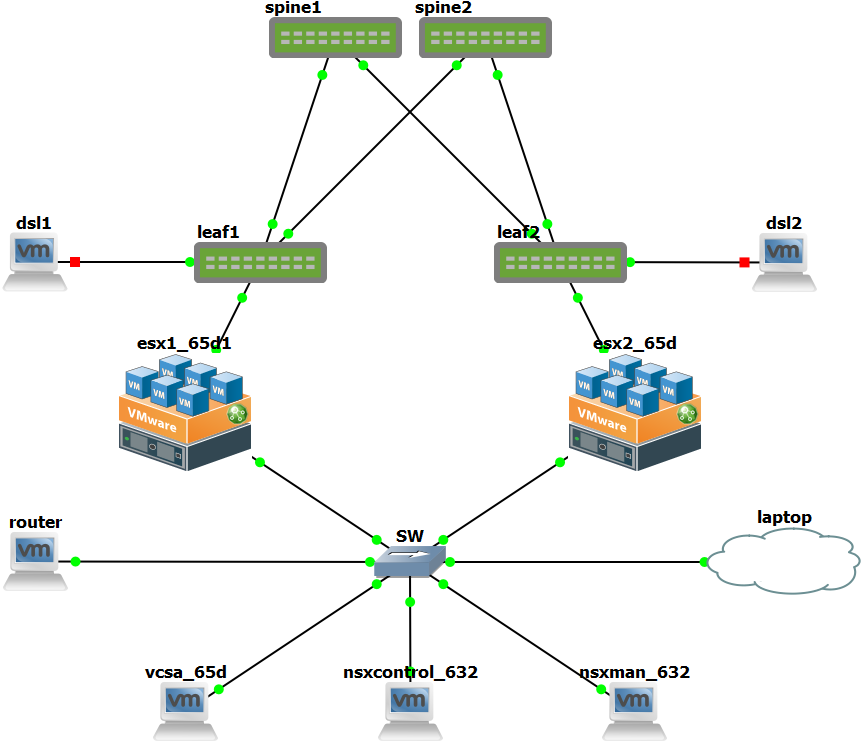

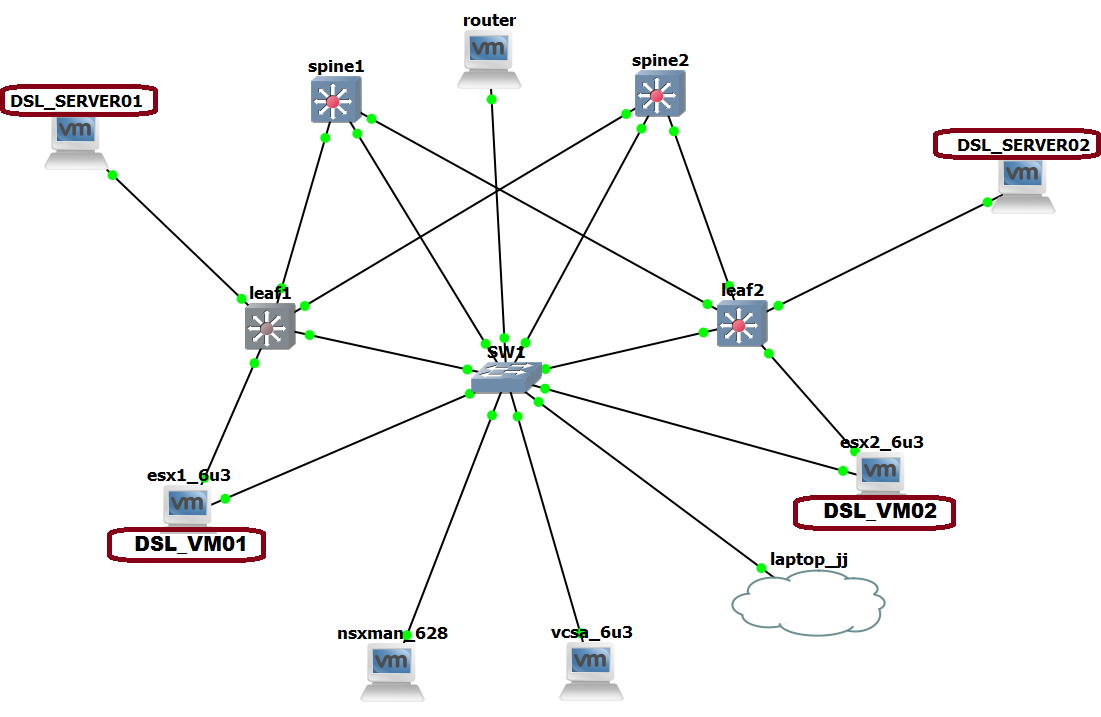

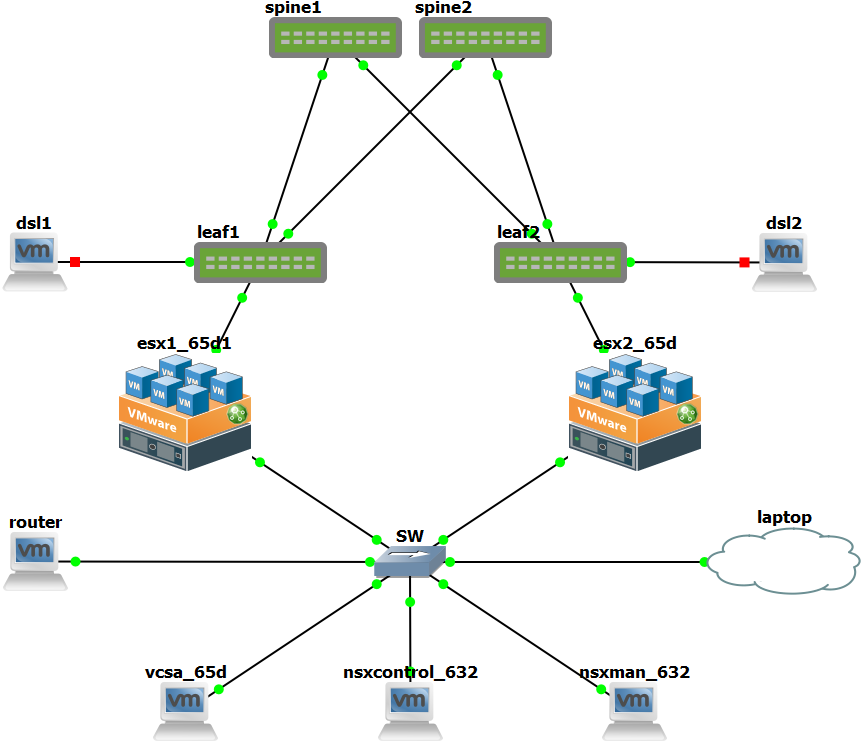

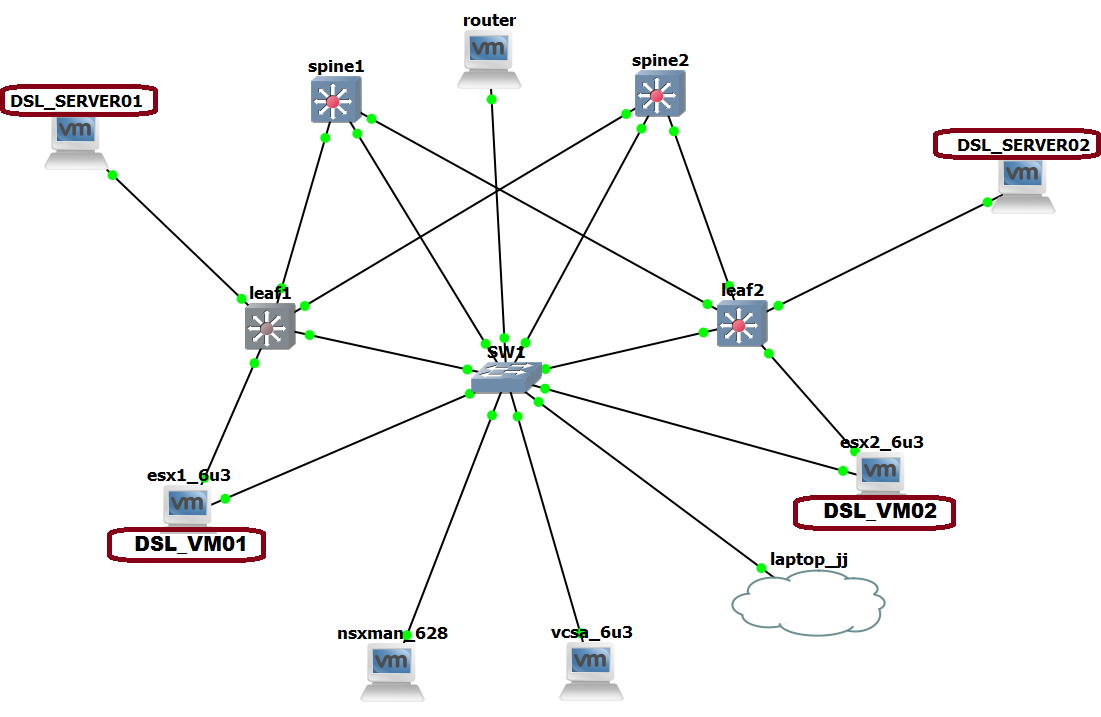

The second article in this series sets up a virtual Cumulus VX Clos fabric and connects it to the NSX setup from our previous article, including VTEP integration!

For this we need to expand our LAB environment:

- Freesco Router (for dns, ntp and internet)

- Management switch (gns3 builtin)

- VXLAN Router (Cisco 3640): not really a VXLAN router, but it creates an L3 hop between the ESXi hosts and so routes the VXLAN packets

- VMware vCenter Server Appliance 6.5.0d

- Two VMware ESXi 6.5.0d hosts

- NSX Manager 6.3.2

- NSX Controller 6.3.2 (3 for HA, but 1 works just fine in the lab)

- Two test VMs (we chose Alpine Linux for this occasion) running on esx1 & esx2

- Four Cumulus VX 3.5.0 switches: two spines and two leafs. We started building the lab on Cumulus VX 3.3.2, though, so the configuration may differ somewhat between the two. One big change is that Cumulus Linux 3.4 and later releases replace Quagga with FRRouting.

- Two test "physical" servers (we chose Damn Small Linux) connected to the leafs

Lab IP Address plan

MGMT Network

| Setting | Value |
| --- | --- |
| Default gateway | 10.10.100.1 |
| DNS | 10.10.100.1 |
| Domain | cumuluslab |

| Host | IP address |
| --- | --- |
| router | 10.10.100.1/24 |
| spine1 | 10.10.100.11/24 |
| spine2 | 10.10.100.12/24 |
| leaf1 | 10.10.100.13/24 |
| leaf2 | 10.10.100.14/24 |
| vsca | 10.10.100.20/24 |
| esx1 | 10.10.100.21/24 |
| esx2 | 10.10.100.22/24 |
| nsxcont | 10.10.100.23/24 |
| nsxman | 10.10.100.24/24 |
| laptop | 10.10.100.30/24 |

VXLAN Network

| Interface | IP address | MTU |
| --- | --- | --- |
| esx1 vxlan vmk1 | 10.10.101.1/30 | 1500 |
| leaf1 vtep | 10.10.101.2/30 | 1500 |
| esx2 vxlan vmk1 | 10.10.101.9/30 | 1500 |
| leaf2 vtep | 10.10.101.10/30 | 1500 |

VXLAN Client Network

| Host | IP address | MTU |
| --- | --- | --- |
| alpine01 | 10.10.200.11/24 | 1400 |
| alpine02 | 10.10.200.12/24 | 1400 |
| dsl1 | 10.10.200.13/24 | 1400 |
| dsl2 | 10.10.200.14/24 | 1400 |
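The MTU values in the address plan are worth a quick sanity check: VXLAN wraps every client frame in extra headers, so the client MTU has to leave room for them on the 1500-byte transport links. Below is a minimal sketch in Python, assuming an IPv4 underlay without an outer VLAN tag (50 bytes of overhead). The function and variable names are illustrative only and are not part of the lab tooling.

```python
import ipaddress

# VXLAN overhead for an IPv4 underlay without an outer VLAN tag (assumption):
# inner Ethernet (14) + VXLAN (8) + UDP (8) + outer IPv4 (20) = 50 bytes.
VXLAN_OVERHEAD = 14 + 8 + 8 + 20

# Values copied from the address plan.
TRANSPORT_MTU = 1500
CLIENT_MTU = 1400
VTEP_LINKS = {
    "esx1-leaf1": ("10.10.101.1/30", "10.10.101.2/30"),
    "esx2-leaf2": ("10.10.101.9/30", "10.10.101.10/30"),
}


def fits(client_mtu: int, transport_mtu: int) -> bool:
    """Return True if an encapsulated client packet fits the transport MTU."""
    return client_mtu + VXLAN_OVERHEAD <= transport_mtu


def same_subnet(a: str, b: str) -> bool:
    """Return True if both interface addresses sit in the same network."""
    return ipaddress.ip_interface(a).network == ipaddress.ip_interface(b).network


if __name__ == "__main__":
    print("overhead:", VXLAN_OVERHEAD)
    print("client MTU fits:", fits(CLIENT_MTU, TRANSPORT_MTU))
    for name, (left, right) in VTEP_LINKS.items():
        print(name, "same /30:", same_subnet(left, right))
```

Under these assumptions, any client MTU above 1450 would no longer fit a 1500-byte underlay, which is consistent with the 1400 used on the test hosts.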

SPINE & LEAF interface configuration

SPINE1 - /etc/network/interfaces (SPINE2 and the NCLU equivalents follow):

# The loopback network interface

auto lo

iface lo inet loopback

address 10.10.102.11

netmask 255.255.255.255

# The primary network interface

auto eth0

iface eth0 inet static

address 10.10.100.11

netmask 255.255.255.0

gateway 10.10.100.1

# Uplinks

auto swp1

iface swp1

auto swp2

iface swp2

SPINE2 - /etc/network/interfaces:

# The loopback network interface

auto lo

iface lo inet loopback

address 10.10.102.12

netmask 255.255.255.255

# The primary network interface

auto eth0

iface eth0 inet static

address 10.10.100.12

netmask 255.255.255.0

gateway 10.10.100.1

# Uplinks

auto swp1

iface swp1

auto swp2

iface swp2

SPINE1 and SPINE2 - NCLU:

#spine01

net add loopback lo ip address 10.10.102.11/32

net add interface eth0 ip address 10.10.100.11/24

net add routing route 0.0.0.0/0 10.10.100.1

net commit


#spine02

net add loopback lo ip address 10.10.102.12/32

net add interface eth0 ip address 10.10.100.12/24

net add routing route 0.0.0.0/0 10.10.100.1

net commit

LEAF1 - /etc/network/interfaces (LEAF2 and the NCLU equivalents follow):

# The loopback network interface

auto lo

iface lo inet loopback

address 10.10.102.13

netmask 255.255.255.255

# The primary network interface

auto eth0

iface eth0 inet static

address 10.10.100.13

netmask 255.255.255.0

gateway 10.10.100.1

# Uplinks

auto swp1

iface swp1

auto swp2

iface swp2

# ESX1

auto swp3

iface swp3 inet static

address 10.10.101.2

netmask 255.255.255.252

mtu 1500

auto swp4

iface swp4

auto swp5

iface swp5

auto swp6

iface swp6

LEAF2 - /etc/network/interfaces:

# The loopback network interface

auto lo

iface lo inet loopback

address 10.10.102.14

netmask 255.255.255.255

# The primary network interface

auto eth0

iface eth0 inet static

address 10.10.100.14

netmask 255.255.255.0

gateway 10.10.100.1

# Uplinks

auto swp1

iface swp1

auto swp2

iface swp2

# ESX2

auto swp3

iface swp3 inet static

address 10.10.101.10

netmask 255.255.255.252

mtu 1500

auto swp4

iface swp4

auto swp5

iface swp5

auto swp6

iface swp6

LEAF1 and LEAF2 - NCLU:

#leaf01

net add loopback lo ip address 10.10.102.13/32

net add interface eth0 ip address 10.10.100.13/24

net add routing route 0.0.0.0/0 10.10.100.1

net add interface swp3 ip address 10.10.101.2/30

net add interface swp3 mtu 1500

net commit

#leaf02

net add loopback lo ip address 10.10.102.14/32

net add interface eth0 ip address 10.10.100.14/24

net add routing route 0.0.0.0/0 10.10.100.1

net add interface swp3 ip address 10.10.101.10/30

net add interface swp3 mtu 1500

net commit
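Since the four switches differ only in a handful of addresses, the NCLU lines can also be generated from a small table instead of being typed by hand. The following is a rough sketch, not part of the original lab: the helper name `nclu_interface_commands` and the `SWITCHES` dictionary are made up for illustration, and the output still has to be pasted or piped into each switch.

```python
def nclu_interface_commands(loopback, mgmt, gateway, vtep_link=None, mtu=1500):
    """Build the NCLU lines for one switch as a list of strings."""
    cmds = [
        f"net add loopback lo ip address {loopback}/32",
        f"net add interface eth0 ip address {mgmt}/24",
        f"net add routing route 0.0.0.0/0 {gateway}",
    ]
    if vtep_link is not None:
        # Only the leafs have a routed swp3 link towards an ESXi host.
        cmds.append(f"net add interface swp3 ip address {vtep_link}")
        cmds.append(f"net add interface swp3 mtu {mtu}")
    cmds.append("net commit")  # pending NCLU changes only apply on commit
    return cmds


# Addresses taken from the lab IP address plan.
SWITCHES = {
    "spine1": ("10.10.102.11", "10.10.100.11", None),
    "spine2": ("10.10.102.12", "10.10.100.12", None),
    "leaf1": ("10.10.102.13", "10.10.100.13", "10.10.101.2/30"),
    "leaf2": ("10.10.102.14", "10.10.100.14", "10.10.101.10/30"),
}

if __name__ == "__main__":
    for name, (loopback, mgmt, vtep_link) in SWITCHES.items():
        print(f"# {name}")
        for line in nclu_interface_commands(loopback, mgmt, "10.10.100.1", vtep_link):
            print(line)
```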

SPINE & LEAF routing configuration

To turn the Cumulus switches into an EVPN fabric, we enable BGP as the routing protocol.

We establish peering between all neighbors (leaf1 to spine1 and spine2, leaf2 to spine1 and spine2)

SPINE1 - /etc/quagga/Quagga.conf, or /etc/frr/frr.conf on Cumulus Linux 3.4 and later (SPINE2 and the NCLU equivalents follow):

!

interface swp1

ipv6 nd ra-interval 10

no ipv6 nd suppress-ra

!

interface swp2

ipv6 nd ra-interval 10

no ipv6 nd suppress-ra

!

interface swp3

ipv6 nd ra-interval 10

no ipv6 nd suppress-ra

!

interface swp4

ipv6 nd ra-interval 10

no ipv6 nd suppress-ra

! enabling BGP

router bgp 65020

bgp router-id 10.0.0.21

bgp bestpath as-path multipath-relax

! we configure a peer-group to put all the fabric nodes in and configure their capabilities.

neighbor fabric peer-group

neighbor fabric remote-as external

neighbor fabric description Internal Fabric Network

neighbor fabric capability extended-nexthop

! we configure the switchports to be in the peer-group we just created.

neighbor swp1 interface peer-group fabric

neighbor swp2 interface peer-group fabric

neighbor swp3 interface peer-group fabric

neighbor swp4 interface peer-group fabric

! we enable the default address-families for L3 routing between VTEP endpoints

address-family ipv4 unicast

! add loopback interface to BGP (this is the VTEP endpoint on this switch)

network 10.0.0.21/32

redistribute connected

exit-address-family

!

address-family ipv6 unicast

neighbor fabric activate

exit-address-family

! we enable the EVPN address family, which is the VXLAN control-plane protocol.

address-family evpn

neighbor fabric activate

exit-address-family

!

SPINE2 - /etc/quagga/Quagga.conf:

!

interface swp1

ipv6 nd ra-interval 10

no ipv6 nd suppress-ra

!

interface swp2

ipv6 nd ra-interval 10

no ipv6 nd suppress-ra

!

interface swp3

ipv6 nd ra-interval 10

no ipv6 nd suppress-ra

!

interface swp4

ipv6 nd ra-interval 10

no ipv6 nd suppress-ra

! enabling BGP

router bgp 65020

bgp router-id 10.0.0.22

bgp bestpath as-path multipath-relax

! we configure a peer-group to put all the fabric nodes in and configure their capabilities.

neighbor fabric peer-group

neighbor fabric remote-as external

neighbor fabric description Internal Fabric Network

neighbor fabric capability extended-nexthop

! we configure the switchports to be in the peer-group we just created.

neighbor swp1 interface peer-group fabric

neighbor swp2 interface peer-group fabric

neighbor swp3 interface peer-group fabric

neighbor swp4 interface peer-group fabric

! we enable the default address-families for L3 routing between VTEP endpoints

address-family ipv4 unicast

! add loopback interface to BGP (this is the VTEP endpoint on this switch)

network 10.0.0.22/32

redistribute connected

exit-address-family

!

address-family ipv6 unicast

neighbor fabric activate

exit-address-family

! we enable the EVPN address family, which is the VXLAN control-plane protocol.

address-family evpn

neighbor fabric activate

exit-address-family

!

SPINE1 and SPINE2 - NCLU:

#spine01

net add bgp autonomous-system 65020

net add bgp router-id 10.0.0.21

net add bgp bestpath as-path multipath-relax

net add bgp neighbor fabric peer-group

net add bgp neighbor fabric remote-as external

net add bgp neighbor fabric description Internal Fabric Network

net add bgp neighbor fabric capability extended-nexthop

net add bgp neighbor swp1 interface peer-group fabric

net add bgp neighbor swp2 interface peer-group fabric

net add bgp ipv4 unicast network 10.0.0.21/32

net add bgp ipv4 unicast redistribute connected

net add bgp ipv6 unicast neighbor fabric activate

net add bgp evpn neighbor fabric activate

net commit

#spine02

net add bgp autonomous-system 65020

net add bgp router-id 10.0.0.22

net add bgp bestpath as-path multipath-relax

net add bgp neighbor fabric peer-group

net add bgp neighbor fabric remote-as external

net add bgp neighbor fabric description Internal Fabric Network

net add bgp neighbor fabric capability extended-nexthop

net add bgp neighbor swp1 interface peer-group fabric

net add bgp neighbor swp2 interface peer-group fabric

net add bgp ipv4 unicast network 10.0.0.22/32

net add bgp ipv4 unicast redistribute connected

net add bgp ipv6 unicast neighbor fabric activate

net add bgp evpn neighbor fabric activate

net commit

LEAF1 - /etc/quagga/Quagga.conf, or /etc/frr/frr.conf on Cumulus Linux 3.4 and later (LEAF2 and the NCLU equivalents follow):

!

interface swp1

ipv6 nd ra-interval 10

no ipv6 nd suppress-ra

!

interface swp2

ipv6 nd ra-interval 10

no ipv6 nd suppress-ra

! enabling BGP

router bgp 65011

bgp router-id 10.0.0.11

bgp bestpath as-path multipath-relax

! we configure a peer-group to put all the fabric nodes in and configure their capabilities.

neighbor fabric peer-group

neighbor fabric remote-as external

neighbor fabric description Internal Fabric Network

neighbor fabric capability extended-nexthop

! we configure the switchports to be in the peer-group we just created.

neighbor swp1 interface peer-group fabric

neighbor swp2 interface peer-group fabric

! we enable the default address-families for L3 routing between VTEP endpoints

address-family ipv4 unicast

! add loopback interface to BGP (this is the VTEP endpoint on this switch)

network 10.0.0.11/32

redistribute connected

exit-address-family

!

address-family ipv6 unicast

neighbor fabric activate

exit-address-family

! we enable the EVPN address family, which is the VXLAN control-plane protocol.

address-family evpn

neighbor fabric activate

! provision all locally configured VNIs to be advertised by the BGP control plane.

advertise-all-vni

exit-address-family

!

LEAF2 - /etc/quagga/Quagga.conf:

!

interface swp1

ipv6 nd ra-interval 10

no ipv6 nd suppress-ra

!

interface swp2

ipv6 nd ra-interval 10

no ipv6 nd suppress-ra

! enabling BGP

router bgp 65012

bgp router-id 10.0.0.12

bgp bestpath as-path multipath-relax

! we configure a peer-group to put all the fabric nodes in and configure their capabilities.

neighbor fabric peer-group

neighbor fabric remote-as external

neighbor fabric description Internal Fabric Network

neighbor fabric capability extended-nexthop

! we configure the switchports to be in the peer-group we just created.

neighbor swp1 interface peer-group fabric

neighbor swp2 interface peer-group fabric

! we enable the default address-families for L3 routing between VTEP endpoints

address-family ipv4 unicast

! add loopback interface to BGP (this is the VTEP endpoint on this switch)

network 10.0.0.12/32

redistribute connected

exit-address-family

!

address-family ipv6 unicast

neighbor fabric activate

exit-address-family

! we enable the EVPN address family, which is the VXLAN control-plane protocol.

address-family evpn

neighbor fabric activate

! provision all locally configured VNIs to be advertised by the BGP control plane.

advertise-all-vni

exit-address-family

!

LEAF1 and LEAF2 - NCLU:

#leaf01

net add bgp autonomous-system 65011

net add bgp router-id 10.0.0.11

net add bgp bestpath as-path multipath-relax

net add bgp neighbor fabric peer-group

net add bgp neighbor fabric remote-as external

net add bgp neighbor fabric description Internal Fabric Network

net add bgp neighbor fabric capability extended-nexthop

net add bgp neighbor swp1 interface peer-group fabric

net add bgp neighbor swp2 interface peer-group fabric

net add bgp ipv4 unicast network 10.0.0.11/32

net add bgp ipv4 unicast redistribute connected

net add bgp ipv6 unicast neighbor fabric activate

net add bgp evpn neighbor fabric activate

net add bgp evpn advertise-all-vni

net commit

#leaf02

net add bgp autonomous-system 65012

net add bgp router-id 10.0.0.12

net add bgp bestpath as-path multipath-relax

net add bgp neighbor fabric peer-group

net add bgp neighbor fabric remote-as external

net add bgp neighbor fabric description Internal Fabric Network

net add bgp neighbor fabric capability extended-nexthop

net add bgp neighbor swp1 interface peer-group fabric

net add bgp neighbor swp2 interface peer-group fabric

net add bgp ipv4 unicast network 10.0.0.12/32

net add bgp ipv4 unicast redistribute connected

net add bgp ipv6 unicast neighbor fabric activate

net add bgp evpn neighbor fabric activate

net add bgp evpn advertise-all-vni

net commit

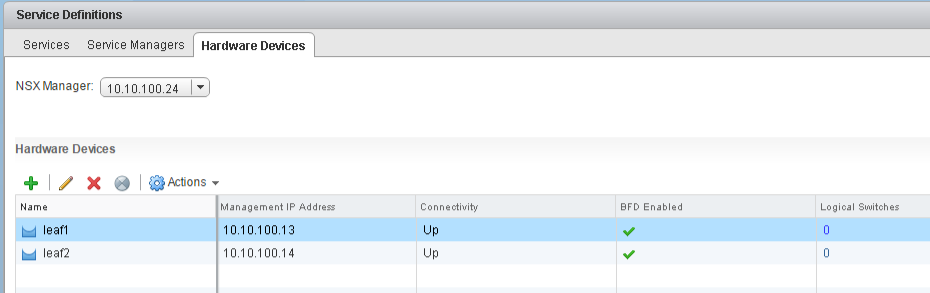
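The peering design in the BGP configuration can be summarised in a few lines of Python. This is only a model of the AS numbers used in the lab, not something that talks to the switches, and the helper names are invented for the sketch. It shows that every leaf-to-spine session is eBGP (which `remote-as external` requires) and that a leaf learns another leaf's prefix over two paths, one per spine.

```python
from itertools import product

# AS numbers from the router bgp statements in the lab configuration.
ASN = {"spine1": 65020, "spine2": 65020, "leaf1": 65011, "leaf2": 65012}
SPINES = ["spine1", "spine2"]
LEAFS = ["leaf1", "leaf2"]


def sessions():
    """Every leaf peers with every spine; no leaf-leaf or spine-spine sessions."""
    return list(product(LEAFS, SPINES))


def is_ebgp(a, b):
    """'remote-as external' only comes up between routers in different ASes."""
    return ASN[a] != ASN[b]


def as_paths_to(dst_leaf):
    """AS paths another leaf learns for dst_leaf's prefixes, one per spine."""
    return [(ASN[spine], ASN[dst_leaf]) for spine in SPINES]


if __name__ == "__main__":
    for leaf, spine in sessions():
        print(f"{leaf} <-> {spine}: eBGP={is_ebgp(leaf, spine)}")
    print("leaf1 -> leaf2 AS paths:", as_paths_to("leaf2"))
```

Because both spines share AS 65020, the two AS paths come out identical, so both are equal-cost candidates. `bgp bestpath as-path multipath-relax` goes one step further and also allows load-balancing across paths of equal length whose AS numbers differ.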

Setup VTEP Integration

The next step is integrating the "hardware" VTEP with NSX.

Hardware VTEP integration is cool because we can manage physical ports from NSX and add them to an NSX logical switch, bringing the physical world into the virtual world.

Also check the Cumulus docs for a comprehensive step-by-step guide.

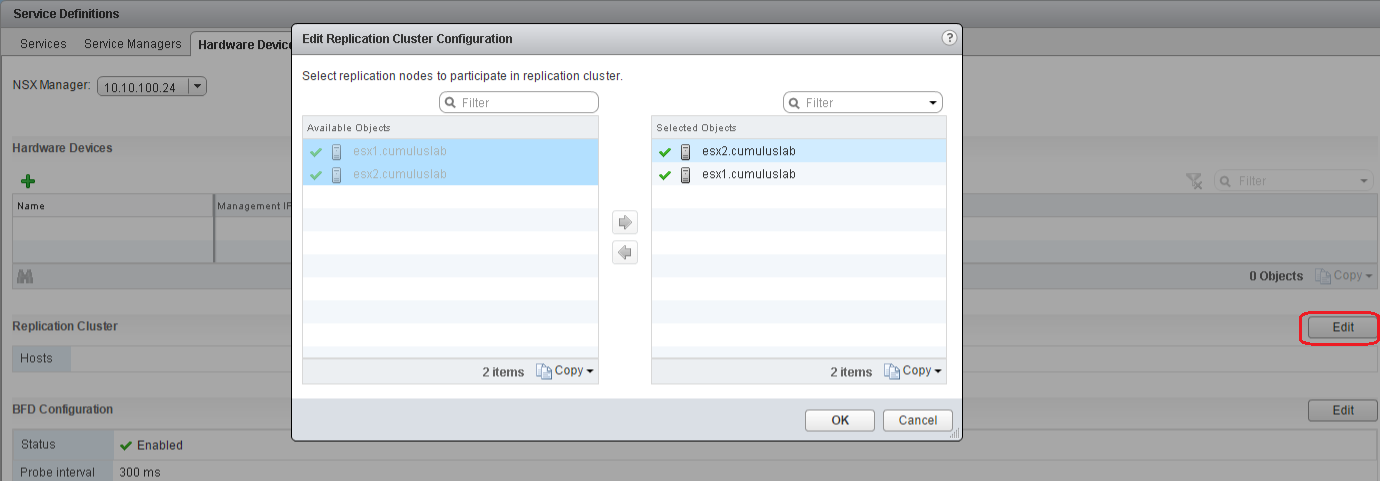

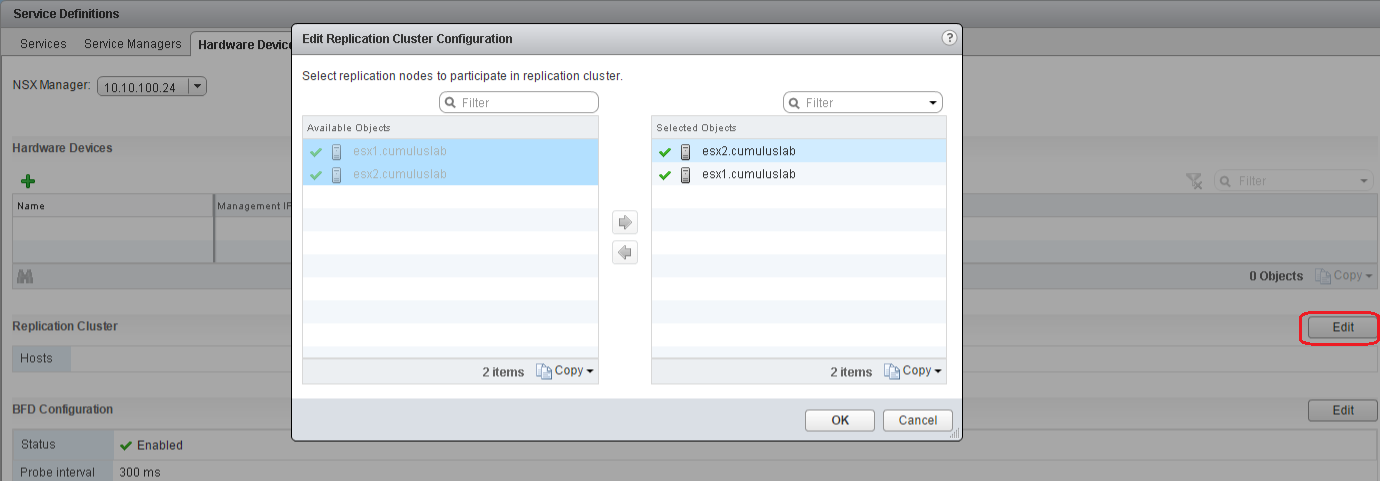

1. Configure the NSX Replication Cluster

Networking & Security > Service Definitions > Hardware Devices > Replication Cluster > Edit.

The replication cluster will be responsible for forwarding the broadcast traffic sent from a hardware VTEP.

2. Configure openvswitch-vtep on both Cumulus leaf switches

Run these commands on both leaf switches:

sudo systemctl enable openvswitch-vtep.service

sudo systemctl start openvswitch-vtep.service

3. Bootstrap both Cumulus leaf switches

LEAF1:

sudo vtep-bootstrap --credentials-path /var/lib/openvswitch --controller_ip 10.10.100.23 leaf1 10.10.102.13 10.10.100.13

LEAF2:

sudo vtep-bootstrap --credentials-path /var/lib/openvswitch --controller_ip 10.10.100.23 leaf2 10.10.102.14 10.10.100.14
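Reading the two commands above, the three positional arguments are the switch name, its loopback address (used as the tunnel IP) and its management address, with the NSX controller passed via `--controller_ip`. As a small illustration, the command line can be assembled per leaf like this. The helper `vtep_bootstrap_argv` is hypothetical and only builds the string; it does not run anything.

```python
import shlex


def vtep_bootstrap_argv(switch_name, tunnel_ip, mgmt_ip,
                        controller_ip="10.10.100.23",
                        credentials_path="/var/lib/openvswitch"):
    """Return the vtep-bootstrap call from this article as an argument list."""
    return [
        "sudo", "vtep-bootstrap",
        "--credentials-path", credentials_path,
        "--controller_ip", controller_ip,
        switch_name, tunnel_ip, mgmt_ip,
    ]


# Loopback and management addresses from the lab IP address plan.
LEAFS = {
    "leaf1": ("10.10.102.13", "10.10.100.13"),
    "leaf2": ("10.10.102.14", "10.10.100.14"),
}

if __name__ == "__main__":
    for name, (tunnel_ip, mgmt_ip) in LEAFS.items():
        print(shlex.join(vtep_bootstrap_argv(name, tunnel_ip, mgmt_ip)))
```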

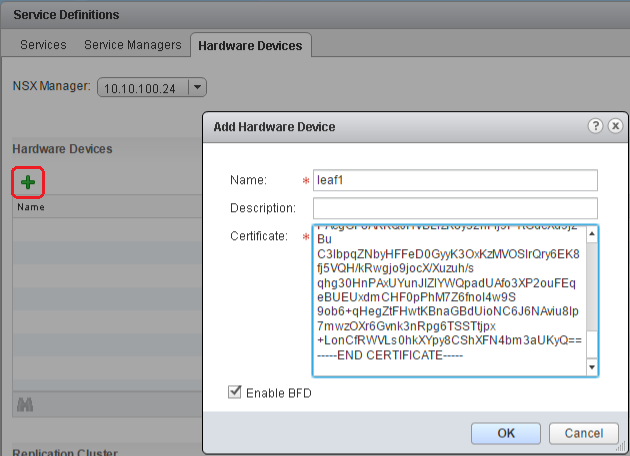

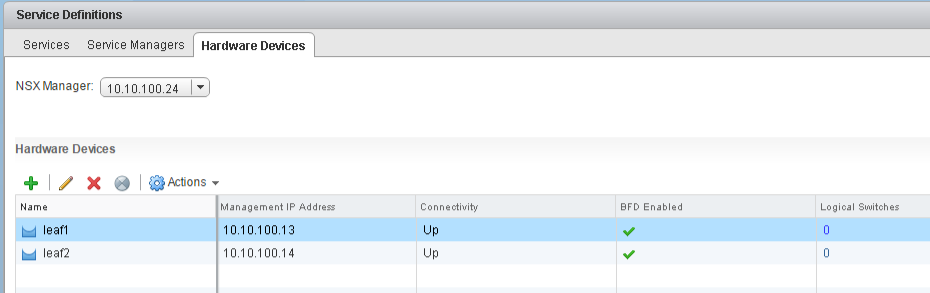

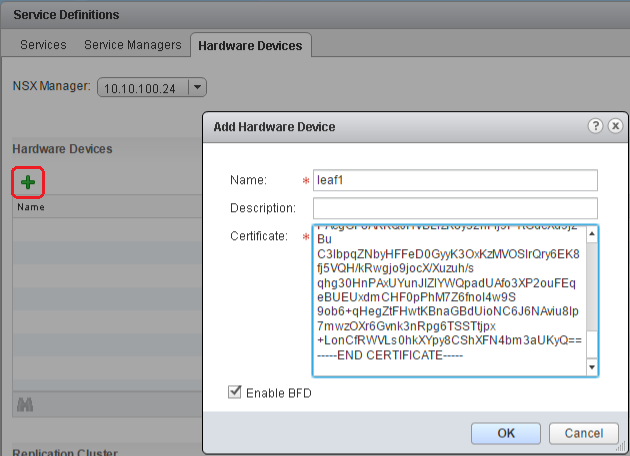

4. Configure the switch as a VTEP gateway

For LEAF1, copy the certificate content from LEAF1:/var/lib/openvswitch/leaf1-cert.pem and paste the Base64 block, including its BEGIN and END lines, into the Certificate field while adding the Hardware Device. Also enable BFD.

Networking & Security > Service Definitions > Hardware Devices > Hardware Devices > Click +.

Do the same for LEAF2, using LEAF2:/var/lib/openvswitch/leaf2-cert.pem.
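If the .pem file contains anything besides the Base64 block, only the part between the BEGIN and END lines belongs in the Certificate field. A minimal sketch for pulling that block out, assuming the file uses the usual `-----BEGIN CERTIFICATE-----` markers (an assumption, so check your own file); `extract_certificate` is an illustrative name:

```python
import re
import sys

# Assumed PEM markers; adjust if the file on your switch uses different ones.
PEM_BLOCK = re.compile(
    r"-----BEGIN CERTIFICATE-----\s.*?\s-----END CERTIFICATE-----", re.DOTALL
)


def extract_certificate(text: str) -> str:
    """Return the first PEM certificate block, including BEGIN/END lines."""
    match = PEM_BLOCK.search(text)
    if match is None:
        raise ValueError("no PEM certificate block found")
    return match.group(0)


if __name__ == "__main__":
    # Usage: python3 extract_cert.py /var/lib/openvswitch/leaf1-cert.pem
    if len(sys.argv) > 1:
        with open(sys.argv[1]) as handle:
            print(extract_certificate(handle.read()))
```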

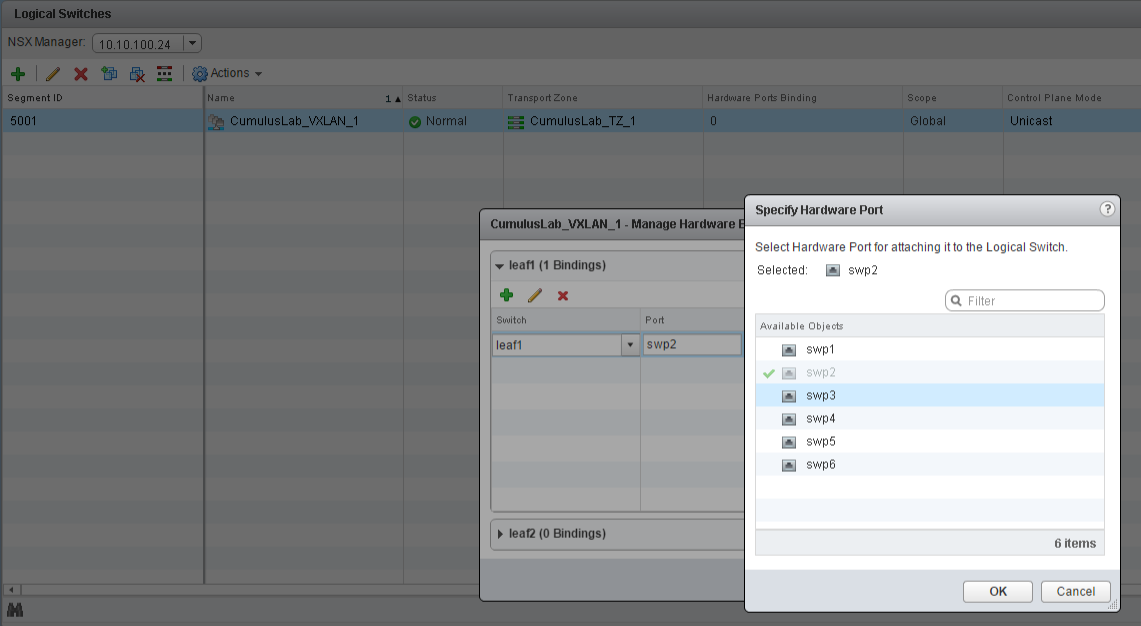

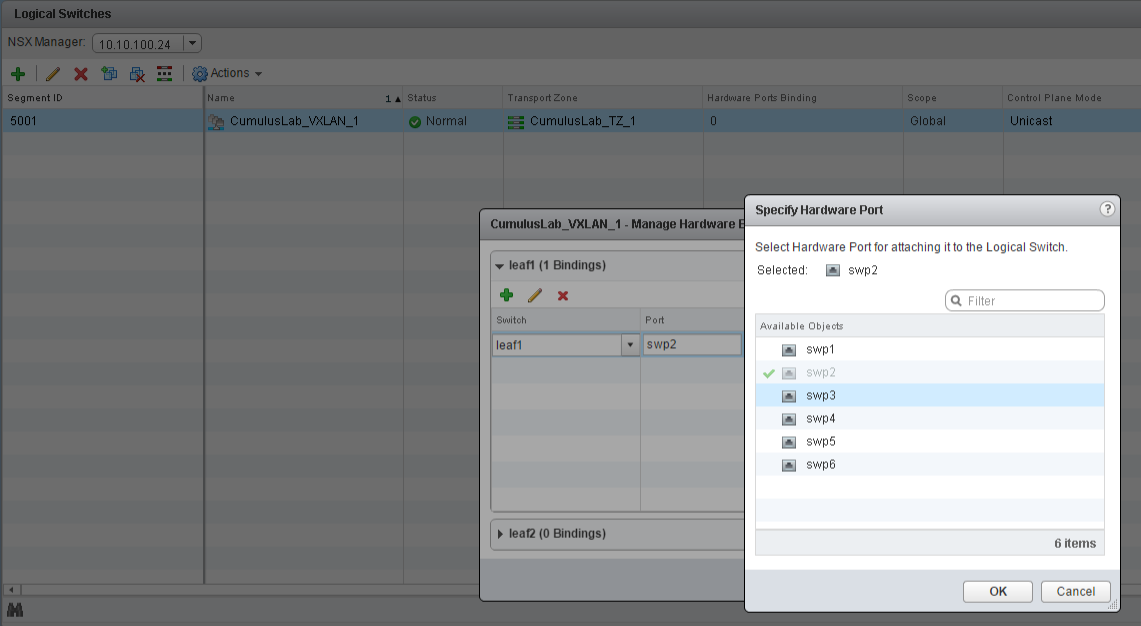

5. Bring the physical server switch port into the virtual world

Go to Networking & Security > Logical Switches and click Manage Hardware Bindings in the Actions menu of your logical switch.

Add leaf1:swp2 (the physical port connected to DSL1) to the logical switch. Do this for both physical servers: DSL_server1 on LEAF1 and DSL_server2 on LEAF2!

Verify our setup

And we're done! Let's look at our LAB and test it!

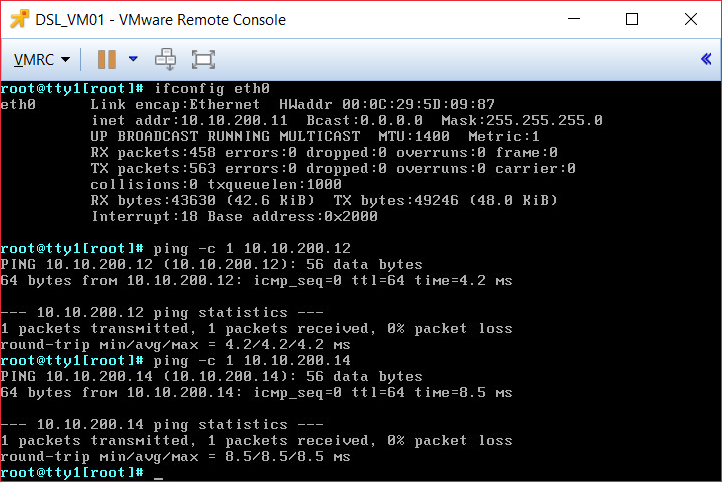

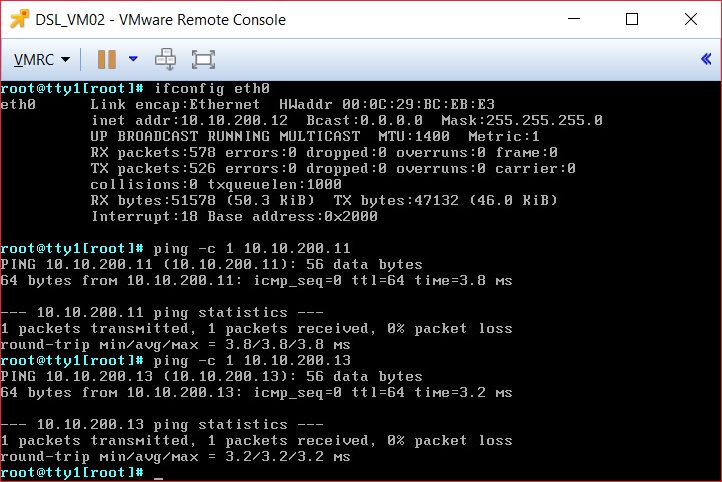

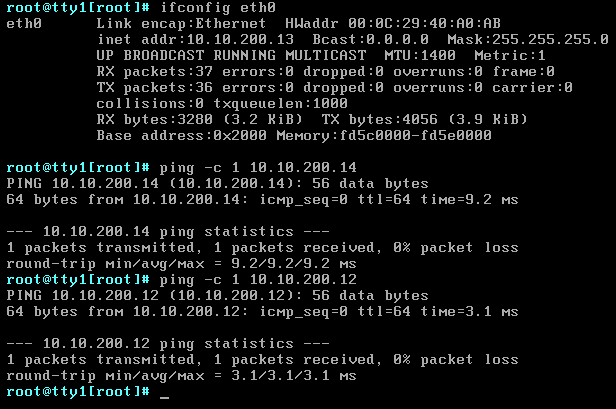

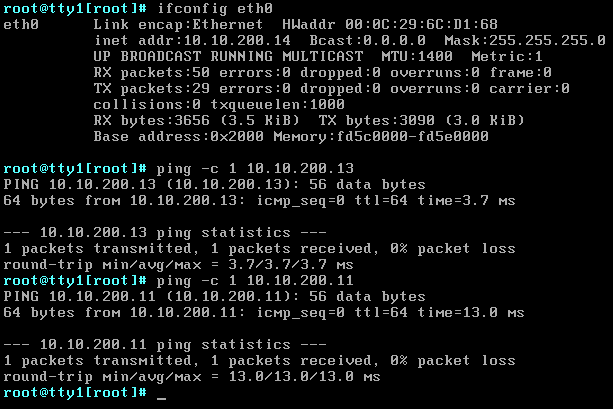

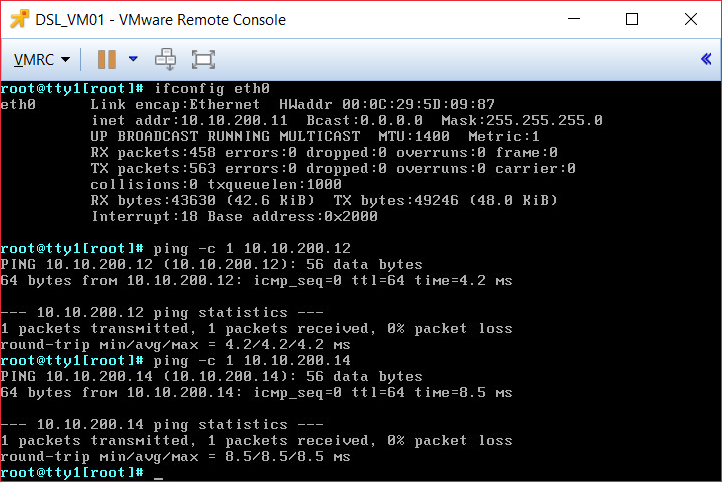

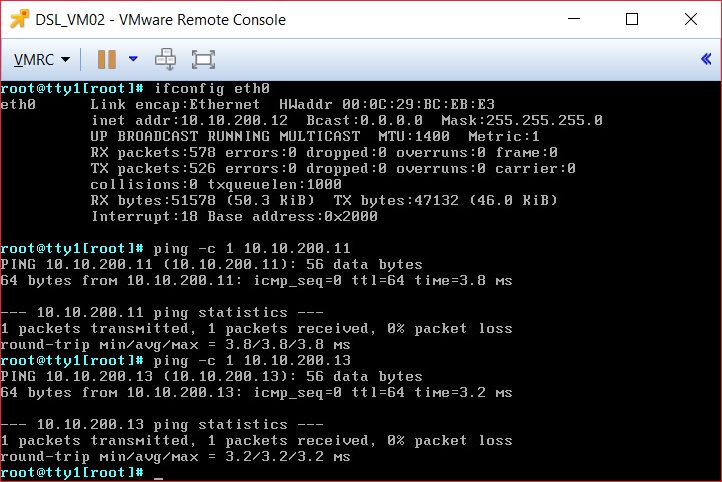

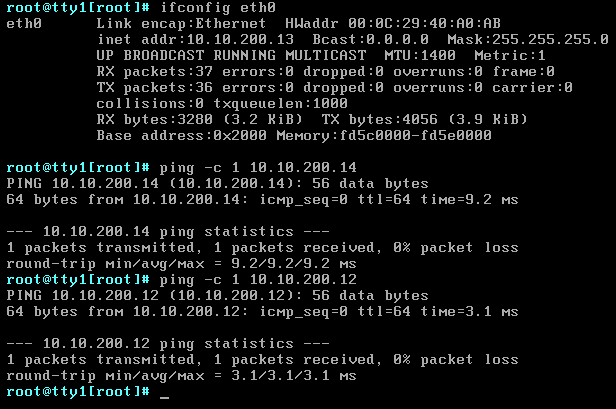

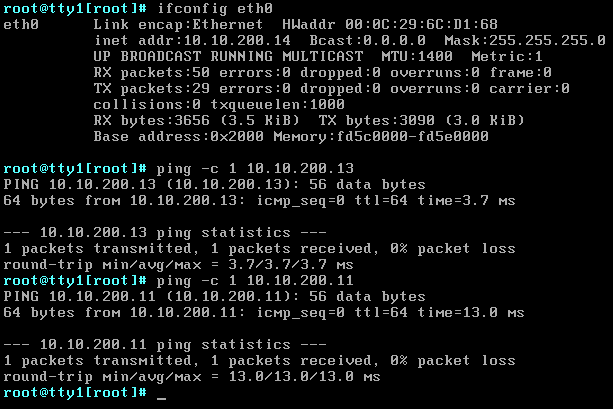

We've got two DSL VMs running on ESX1 and ESX2, and two DSL physical servers connected to LEAF1 and LEAF2. All four nodes are connected to the same VXLAN, which is possible thanks to the hardware VTEP integration between NSX and Cumulus. Cool, right?!

It's an older image, but the setup is the same.

Let's do some final ping tests:

(Ping test screenshots: DSL_VM01, DSL_VM02, DSL_SERVER01 and DSL_SERVER02.)
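For a complete test, every host should reach every other host on the client network. The sketch below lists that full mesh using the host names and addresses from the IP plan, and also works out the largest ICMP payload that fits the 1400-byte client MTU. It only prints the commands to run; the `-c` and `-s` options are the common ping count and payload-size flags, and the helper names are illustrative.

```python
from itertools import permutations

# Client network addresses from the lab IP address plan.
HOSTS = {
    "alpine01": "10.10.200.11",
    "alpine02": "10.10.200.12",
    "dsl1": "10.10.200.13",
    "dsl2": "10.10.200.14",
}

CLIENT_MTU = 1400
# Largest ICMP payload in one packet: MTU minus IPv4 (20) and ICMP (8) headers.
ICMP_PAYLOAD = CLIENT_MTU - 20 - 8


def ping_plan():
    """Return (source host, destination IP) for every ordered pair of hosts."""
    return [(src, HOSTS[dst]) for src, dst in permutations(HOSTS, 2)]


if __name__ == "__main__":
    for src, dst_ip in ping_plan():
        print(f"on {src}: ping -c 3 -s {ICMP_PAYLOAD} {dst_ip}")
```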

And done...

Next up is adding redundancy to the network, how... wait and see!

Articles in this series

https://scict.nl/nsx-cumulus-part1-en/

https://scict.nl/nsx-cumulus-part2-en/

https://scict.nl/nsx-cumulus-part3-en/
