Why Server Side Caching saved my ass!

Maybe the hype has passed, but as a user of SSC solutions for the last 2 years I think a quick comparison and some experience sharing is in order. The trigger was a short conversation about this topic I had this week with someone from a company we're doing a PoC with right now (hint: check an earlier post :)

What is server side caching?

I guess most of you will know, but a quick explanation doesn't hurt anybody (or just skip it :)).

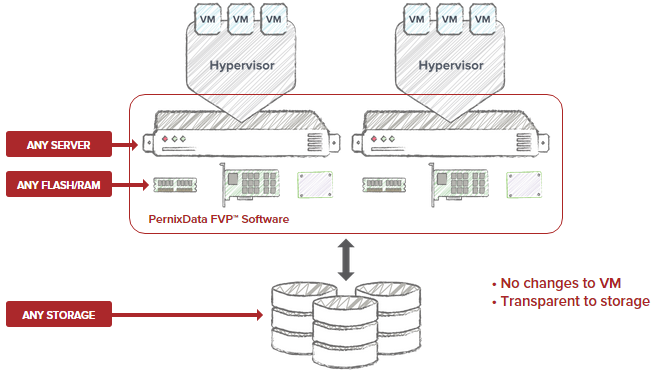

Server side caching, or SSC for short (at work we called it "SuperSnelMaakSoftware", Dutch for roughly "super make-it-fast software"), caches the I/O that a VM requests from the storage array in a local cache within the hypervisor or compute layer of a virtualized environment, usually in RAM or flash. When that same data is accessed a second time, the I/O is handled by the caching layer and the request doesn't have to go all the way down to the array.

Generally this is only done for reads, but some solutions also support write caching, where the write is ack'ed at the cache layer in the host and later destaged to the storage array. A solution with only read caching can still have a positive effect on writes though, because it gives the array and its spindles more headroom to do other stuff, like storing data.

Server side caching works because the most recently used data, or hot data, is close to the VM: fewer hops and no SAN/Ethernet bottlenecks, which results in lower latency. The media types used for acceleration (RAM and any type of flash: SSD, NVMe, flash on DIMM) all have extremely low latency, nanoseconds for RAM and microseconds for flash, coupled with extremely high IOPS; 100k+ per ESXi host is no exception. The I/O blender effect also gets almost nullified by the speed of flash. It's still there, but flash is just way better at random I/O and has far more IOPS available than spinning disk.
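To get a feel for why the hit ratio matters so much, here is a minimal sketch of the average read latency a VM would see. The function name and the latency figures are assumptions picked for illustration only, not measurements from our environment.

```python
def effective_read_latency_us(hit_ratio: float, cache_us: float, array_us: float) -> float:
    """Average read latency when a fraction `hit_ratio` of reads is served from the cache."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    # Hits are answered by the host-local cache, misses still travel to the array.
    return hit_ratio * cache_us + (1.0 - hit_ratio) * array_us


# Hypothetical figures: 100 us for host flash, 10 ms for a busy array.
for ratio in (0.0, 0.5, 0.9):
    print(ratio, effective_read_latency_us(ratio, cache_us=100.0, array_us=10_000.0))
```

Even at a modest hit ratio the average drops quickly, because every hit skips the slowest part of the path.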

A. What happens on a write when only write-through is enabled?

With a write-through caching solution a write behaves the same as without caching, apart from the fact that it's also cached for a future read.

The write is cached and simultaneously written to the array. The acknowledgement has to return from the array before the VM receives the ack and can continue.
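The write-through behaviour can be sketched in a few lines. This is a toy model, not how any of the products implement it: the class name is made up and plain dicts stand in for the array and the host-local cache.

```python
class WriteThroughCache:
    """Toy write-through cache; dicts stand in for the array and the host cache."""

    def __init__(self, array: dict):
        self.array = array  # stand-in for the storage array
        self.cache = {}     # stand-in for host-local RAM or flash

    def write(self, block: int, data: bytes) -> str:
        self.cache[block] = data  # keep a copy for a future read
        self.array[block] = data  # synchronous write to the array
        return "ack"              # the VM only gets the ack after the array write

    def read(self, block: int) -> bytes:
        if block in self.cache:   # served locally, no trip to the array
            return self.cache[block]
        data = self.array[block]
        self.cache[block] = data
        return data
```

The write latency the VM sees is still the array's latency; only later reads of that block benefit.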

B. What happens on a write with write-back enabled?

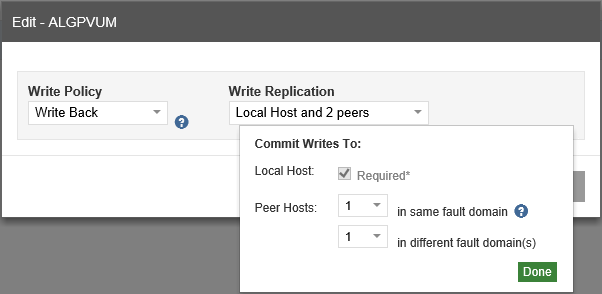

The difference with write-through is that the write to the array is asynchronous and the VM receives the ack as soon as the write hits the cache.

This is potentially combined with some form of fault tolerance: the write that's being cached is also written synchronously to 1 or 2 other cache hosts (a distributed cache), and only ack'ed back to the client once it's written to the cache on these hosts.
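As a rough sketch of the write-back flow described above, under the assumption that peers are just other hosts' caches (modelled as dicts) and that destaging is triggered by hand instead of by a background process. All names are hypothetical.

```python
from collections import deque


class WriteBackCache:
    """Toy write-back cache with synchronous replication to peer caches."""

    def __init__(self, array: dict, peers: list):
        self.array = array    # stand-in for the storage array
        self.peers = peers    # stand-ins for caches on 1 or 2 other hosts
        self.cache = {}
        self.dirty = deque()  # blocks not yet destaged to the array

    def write(self, block: int, data: bytes) -> str:
        self.cache[block] = data
        for peer in self.peers:
            peer[block] = data  # synchronous replica for fault tolerance
        self.dirty.append(block)
        return "ack"            # ack'ed before the array has seen the write

    def destage(self) -> int:
        """Flush dirty blocks to the array; a real product does this in the background."""
        flushed = 0
        while self.dirty:
            block = self.dirty.popleft()
            self.array[block] = self.cache[block]
            flushed += 1
        return flushed
```

Until `destage()` runs, the only copies of the write live in the host caches, which is exactly why the replicas matter.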

C. What happens on a cache-hit?

When data is in the cache, there is no need to fetch it from the array and the VM gets the data quickly. Data can end up in the cache in two ways:

- by a write (check A)

- by a previous read

D. What happens on a cache-miss?

When data isn't in the cache it has to come from the array. Reasons for this could be that:

- The acceleration by the SSC software was turned on after the data had already been written to disk; we call this a first read.

- Data hasn't been used in a long while and has been evicted from the cache
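The hit and miss paths from C and D can be sketched together. The example assumes least-recently-used (LRU) eviction, which is a common policy but not necessarily what any of the products mentioned here use; the class and attribute names are made up.

```python
from collections import OrderedDict


class LruReadCache:
    """Toy read cache with LRU eviction; a dict stands in for the array."""

    def __init__(self, array: dict, capacity: int):
        self.array = array
        self.capacity = capacity
        self.cache = OrderedDict()  # oldest entry first
        self.hits = 0
        self.misses = 0

    def read(self, block: int) -> bytes:
        if block in self.cache:
            self.hits += 1
            self.cache.move_to_end(block)  # mark as most recently used
            return self.cache[block]
        self.misses += 1
        data = self.array[block]           # miss: fetch from the array
        self.cache[block] = data
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False) # evict the least recently used block
        return data
```

A block that was evicted behaves like a first read again: the next access is a miss and goes back to the array.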

2013

Back in 2013 we struggled a lot with application performance. Clearly the NetApp we bought in 2009 couldn't handle the load of the ever-growing virtual infrastructure anymore, and it was getting worse every day. All optimization efforts, like block alignment and RAM disks in VMs, just weren't enough anymore. The NetApp simply couldn't deliver and latency was high (full NVRAM and 1 Gbit networking used for NFS). The environment had grown from a few dozen VMs in 2009 to easily over 200 VMs. But we had enough storage capacity, so we didn't really feel the need to invest earlier in expanding or upgrading the filer just to get more performance, and it also wasn't in the budget.

2014

We started looking into various options and tried out several products in 2014: VMware Flash Read Cache (vFRC), Infinio Accelerator, Proximal Data AutoCache (now part of Samsung) and PernixData FVP.

We quickly wrote off VMware's own Flash Read Cache: the management overhead and the insight into every VM's data usage needed to configure it effectively didn't fit our environment. vFRC is configured on a per-VMDK basis and the optimal block size for each VMDK needs to be set.

We contacted Pernix, Infinio and Proximal in March 2014. We got the bits, tested from April to June and finally went with Infinio for a year! Infinio had an easy-to-deploy solution (a matter of minutes, without any downtime) and supported NFS, which we needed for our NetApp filer. Back then Pernix didn't have support for NFS datastores.

Finally overall performance was at an acceptable level. SSC fixed the problem we were having at the time and "saved" our asses! :)

2015

We felt the need for a more permanent solution in our datacenter. I liked the direction Pernix was going (NFS support, fault domains, etc.). We saw good read I/O offloading with Infinio, but in our situation Infinio added a bit of latency for writes and unaccelerated reads, probably due to the extra hop through the Infinio accelerator VM. Pernix, doing write acceleration and using an in-kernel module, should eliminate that problem completely! We also got ourselves a NetApp CDoT MetroCluster running NFS, so fault domains with Pernix would be ideal!

2016

We now run a stretched HA vSphere Metro Storage Cluster with NetApp CDoT on NFS, with PernixData FVP doing read/write acceleration using the sites as fault domains, and a corresponding vSphere config with regard to HA/DRS and vMSC site affinity. Everything is going strong in our datacenter. End users are getting the application performance they deserve, and we can much more easily buy capacity when we need capacity and performance when we need performance.

General use cases, and experience

It sounds cool, but it's not for everybody. It really depends on your needs and on your architecture. If your applications don't need the faster response or higher throughput, or if you already have flash in your array, you probably won't see big benefits (or any at all), though accelerating with RAM within FVP on a hybrid array or AFA could still drive performance up, especially for bigger block sizes. It all depends! Also, some newer vSphere 6 features are often not supported, like VVOLs and NFS 4.1 (session trunking & Kerberos). Also check: pNFS is not Session Trunking.

Adding VSAN support to SSC solutions doesn't make much sense, I think. With VSAN being a hyperconverged solution, data is already close to the VM. Writes already go to flash, and reads depend on whether the data is hot or cold and on whether you're using a normal hybrid setup for VSAN or all-flash. The speed of RAM could potentially speed up VSAN though: first with an option for a big(ger) read cache, and second with writes going to RAM (especially future non-volatile solutions like flash on DIMM and 3D XPoint).

Possible use cases?

Of course it depends, but it probably works best for SAS/SATA users and for VDI on normal datastores without things like Citrix PVS; there is often smart integration for things like linked clones. Or, of course, when you're struggling with the performance of a slower/older storage array, especially when higher latencies are seen. It can probably extend the lifetime of your existing array by one or two years.

Also, write acceleration isn't always needed, and it complicates the architecture, for example when it comes to consistent backups with storage-level integration. And when used in a fault-tolerant mode it adds network latency for the replicated writes, which may or may not be higher than the latency of your existing array (NVRAM over 10 Gbit Ethernet also acks quickly).
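Whether fault-tolerant write-back actually beats the array comes down to simple arithmetic. The sketch below assumes the local cache write and the replica writes happen in parallel, so the ack waits for the slower of the two; the function name and parameters are hypothetical and you should plug in latencies measured in your own environment.

```python
def write_back_ack_us(local_cache_us: float, network_rtt_us: float,
                      peer_cache_us: float, replicas: int) -> float:
    """Estimated ack latency for a write-back write with `replicas` remote copies."""
    if replicas < 0:
        raise ValueError("replicas cannot be negative")
    if replicas == 0:
        return local_cache_us  # no fault tolerance: ack after the local cache write
    # Assumption: replicas are written in parallel with the local write,
    # so the ack waits for whichever path is slower.
    return max(local_cache_us, network_rtt_us + peer_cache_us)


def write_back_is_faster(ack_us: float, array_write_us: float) -> bool:
    """True when the cache acks a write sooner than the array would."""
    return ack_us < array_write_us
```

If the array already acks from NVRAM over a fast network, the result can easily come out as `False`, in which case write acceleration only adds complexity.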

Today AutoCache is part of Samsung; I haven't heard from them since. News from Infinio is also scarce. Pernix is still going strong, not only with FVP but also with Architect, which they announced last year!

Quick feature comparison:

| | VMware vFRC [^n] | Infinio | Samsung AutoCache | PernixData FVP | PernixData FVP Freedom [^n] |
|---|---|---|---|---|---|
| Flash | YES | NO | YES | YES | NO |
| RAM | NO | YES | NO | YES | YES (total limit 128GiB) |
| Write-through | YES | YES | YES | YES | YES |
| Write-back | NO | NO | NO | YES | NO |
| vSphere support | YES | YES | YES | YES | YES |
| Hyper-V support | NO | NO | YES | NO | NO |
| Integration type | ESXi kernel | VM on each host | Kernel module | Kernel module | Kernel module |

Contact me if you have any questions, or if you're interested in more detail, for example our test results for some of these solutions!
